📥 Collecting Data

The Foundation of Descriptive Analytics

Collecting data is the first and most critical step in any data analytics process. Without reliable, comprehensive, and well-structured data, even the most advanced models and analyses cannot produce meaningful insights. The goal of this stage is to gather raw information that truly represents the phenomena we want to analyze.

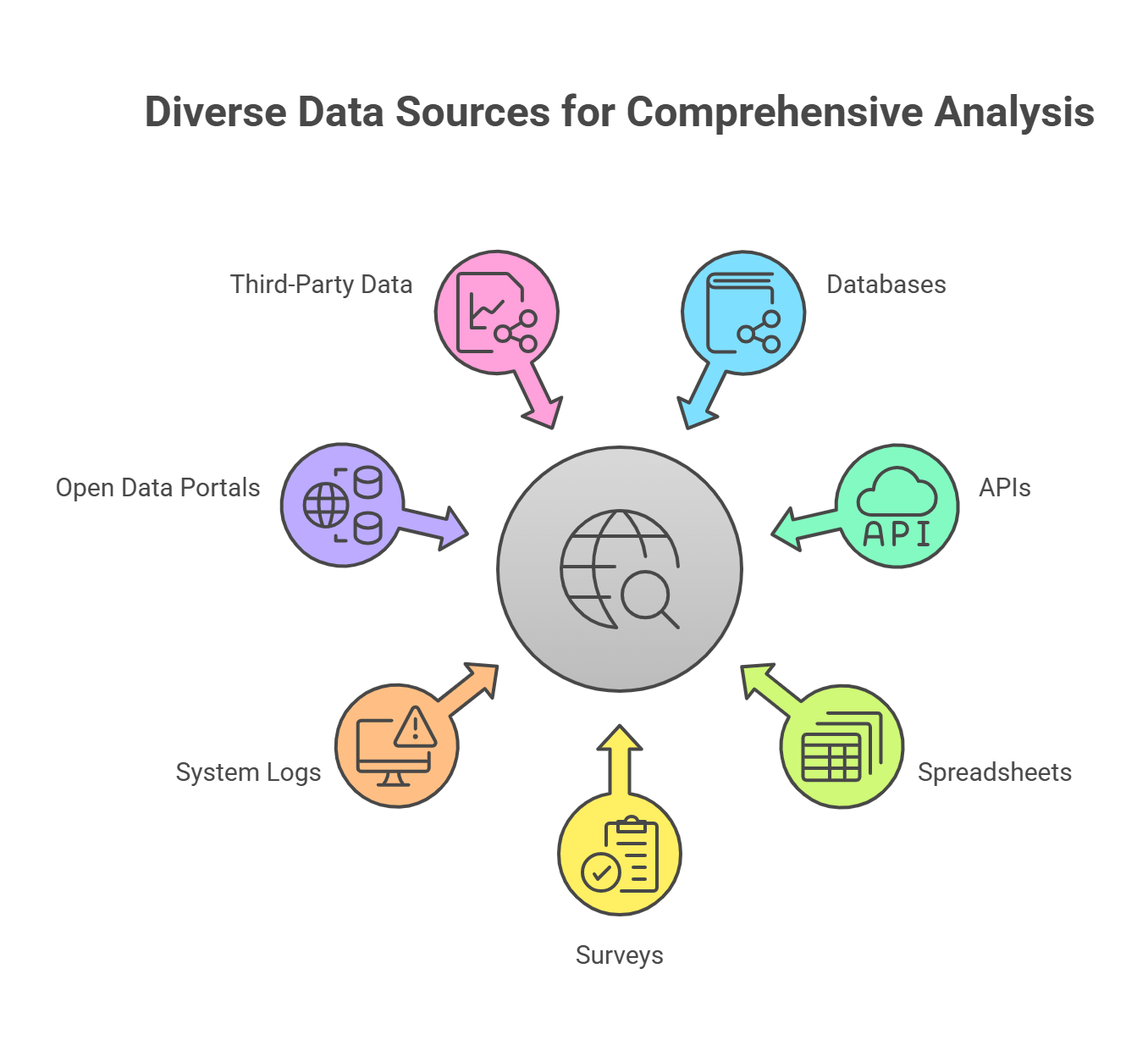

🔎 Typical Sources of Data

- Databases: SQL databases such as MySQL, PostgreSQL, Oracle, and enterprise data warehouses.

- APIs: REST or GraphQL APIs providing data from platforms like Twitter, Google Analytics, or financial services.

- Spreadsheets: Excel or CSV files often used in business reporting and small-scale analytics.

- Surveys & Questionnaires: Data collected directly from users or customers.

- System Logs: Information captured from web servers, applications, or IoT devices.

- Open Data Portals: Government and intergovernmental datasets (e.g., data.gov, WHO data) and academic repositories.

- Third-Party Data Providers: Commercial data providers for financial, demographic, or market data.
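Here is a minimal sketch of pulling records from several of these source types into one common shape, a list of dictionaries, using only Python's standard library. An in-memory SQLite database stands in for a server such as MySQL or PostgreSQL. A CSV string stands in for a spreadsheet export, and a JSON string stands in for a REST API response. The `orders` table and all values are hypothetical.

```python
import csv
import io
import json
import sqlite3


def rows_from_sql(conn: sqlite3.Connection, query: str) -> list[dict]:
    """Run a query and return each row as a dict keyed by column name."""
    cursor = conn.execute(query)
    columns = [desc[0] for desc in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


def rows_from_csv(text: str) -> list[dict]:
    """Parse CSV text (e.g., a spreadsheet export) into dicts using the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def rows_from_json(payload: str) -> list[dict]:
    """Parse a JSON array of objects, a shape many REST APIs return."""
    return json.loads(payload)


# Hypothetical sample data standing in for real sources.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 19.99), (2, 5.00)])

sql_rows = rows_from_sql(conn, "SELECT id, amount FROM orders ORDER BY id")
csv_rows = rows_from_csv("id,amount\n3,12.50\n")
api_rows = rows_from_json('[{"id": 4, "amount": 7.25}]')
```

The CSV values arrive as strings (`"3"`, `"12.50"`), while the SQL and JSON values are already typed. This mismatch is one reason the Transform step of an ETL process matters.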

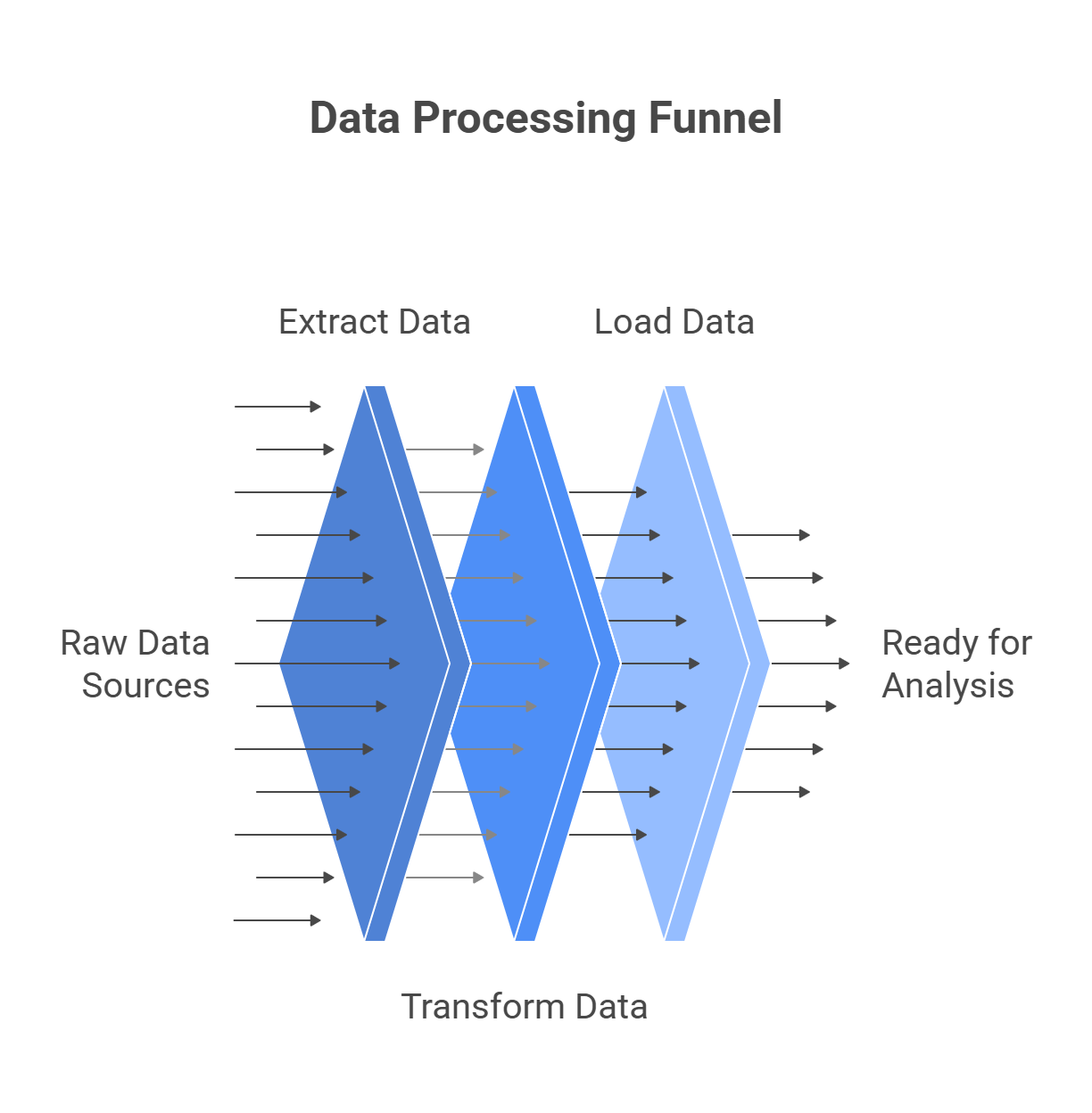

⚙️ ETL (Extract, Transform, Load) in Data Collection

In professional environments, data collection is often part of an ETL process:

- Extract: Retrieve raw data from multiple sources (databases, APIs, flat files).

- Transform: Clean, normalize, and format data into a consistent structure.

- Load: Store the processed data in a target system, such as a data warehouse or cloud platform, ready for analysis.
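The three ETL stages can be sketched end to end with Python's standard library. This illustrates the idea under stated assumptions and is not a production pipeline. The CSV text, the `incidents` table, and the cleaning rules (trim whitespace, title-case city names, drop rows with no count) are all hypothetical, and SQLite plays the role of the target warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract with inconsistent casing, stray spaces, and a gap.
RAW_CSV = """city,date,incidents
 Springfield ,2024-01-05,3
springfield,2024-01-06,
SHELBYVILLE,2024-01-05,1
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and normalize text, cast counts to int, drop incomplete rows."""
    clean = []
    for row in rows:
        count = (row["incidents"] or "").strip()
        if not count:
            continue  # skip rows missing the measurement
        city = row["city"].strip().title()
        clean.append((city, row["date"].strip(), int(count)))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS incidents (city TEXT, date TEXT, incidents INTEGER)"
    )
    conn.executemany("INSERT INTO incidents VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT * FROM incidents ORDER BY city").fetchall()
print(loaded)  # → [('Shelbyville', '2024-01-05', 1), ('Springfield', '2024-01-05', 3)]
```

Each stage is a separate function, so it can be tested and rerun on its own. The same split carries over when the stages are implemented with pandas, PySpark, or a managed ETL service.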

Tools commonly used in ETL processes include:

- Python libraries: pandas for in-memory tabular data and PySpark for large-scale, distributed data processing.

- ETL platforms: Talend, Apache NiFi, Informatica, Microsoft SSIS.

- Cloud services: AWS Glue, Azure Data Factory, Google Cloud Dataflow.

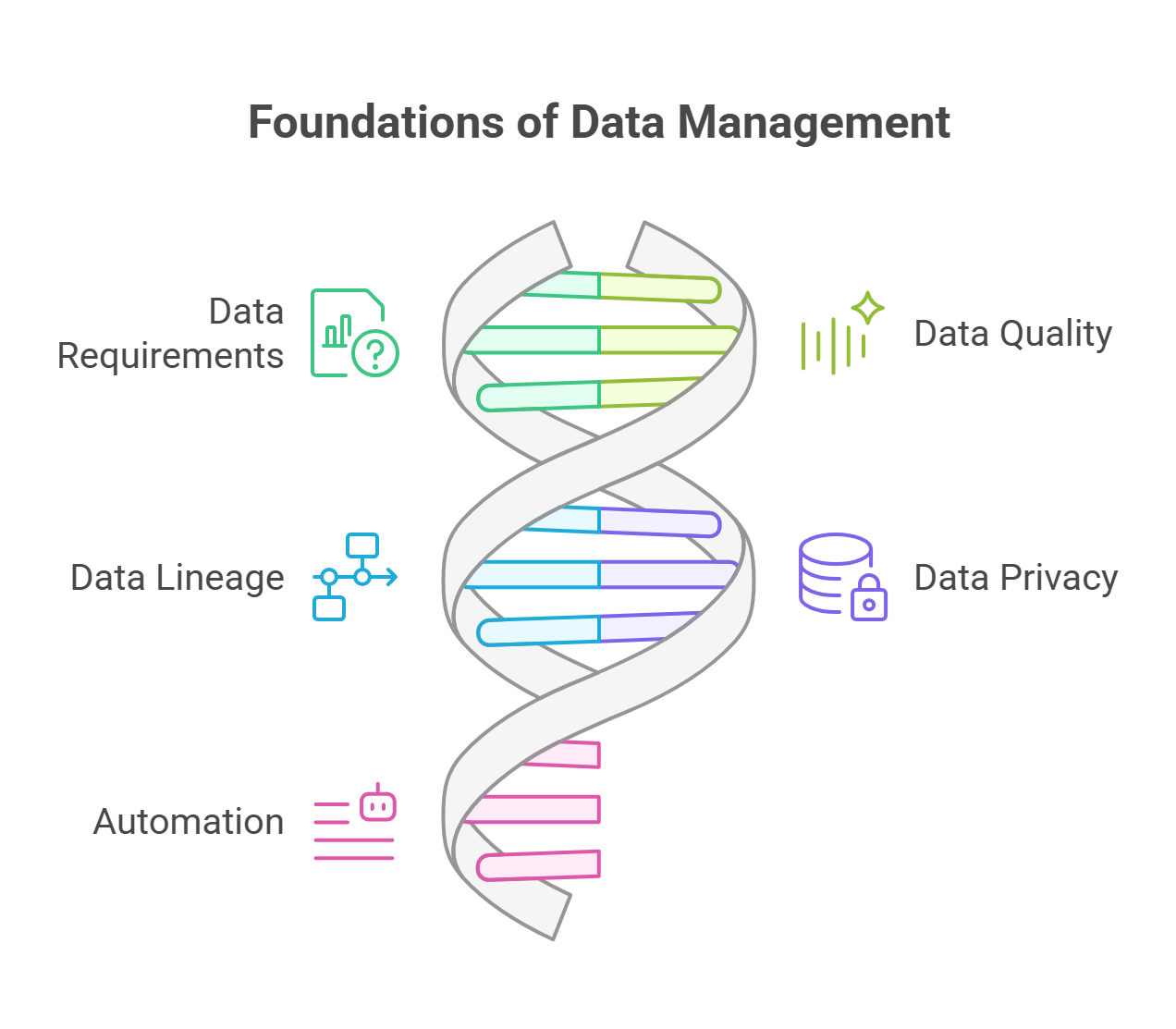

💡 Best Practices

- Define clear data requirements aligned with the business question.

- Ensure data quality by validating formats, completeness, and accuracy.

- Document data lineage: the origin, transformations, and flow of the data.

- Respect data privacy and compliance (e.g., GDPR, HIPAA).

- Automate data collection pipelines to reduce human error and improve efficiency.
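The following minimal validation sketch in Python makes the data-quality practice concrete. The schema (`id`, `date`, `amount`) and the rules are assumptions chosen for illustration:

- Completeness means required fields are non-empty.
- Format means dates parse as `YYYY-MM-DD`.
- The accuracy check is only a plausibility rule: no negative or non-numeric amounts.

```python
from datetime import datetime

REQUIRED_FIELDS = ("id", "date", "amount")  # hypothetical schema


def validate(record: dict) -> list[str]:
    """Return a list of data-quality problems found in one record (empty if valid)."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Format: dates must be ISO 8601 calendar dates (YYYY-MM-DD).
    date = record.get("date")
    if date:
        try:
            datetime.strptime(date, "%Y-%m-%d")
        except ValueError:
            problems.append(f"bad date format: {date!r}")
    # Accuracy (plausibility only): amounts must be non-negative numbers.
    amount = record.get("amount")
    if amount not in (None, ""):
        try:
            if float(amount) < 0:
                problems.append(f"negative amount: {amount}")
        except (TypeError, ValueError):
            problems.append(f"non-numeric amount: {amount!r}")
    return problems


# Hypothetical incoming records.
records = [
    {"id": 1, "date": "2024-03-01", "amount": "10.5"},
    {"id": 2, "date": "03/01/2024", "amount": "-4"},
    {"id": 3, "date": "", "amount": "abc"},
]
report = {r["id"]: validate(r) for r in records}
```

The function returns a list of problems and does not raise on the first error. This lets an automated pipeline log every issue per record and then decide separately whether to reject, quarantine, or repair it.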

📊 Example: Collecting Traffic Data

For a project predicting traffic accidents, data may come from multiple sources:

- Government open data portals (collision reports, road safety records).

- Weather APIs (temperature, precipitation, visibility).

- Traffic sensors and GPS systems (vehicle flow, congestion levels).

- Historical accident databases maintained by municipalities or insurance companies.

Integrating these sources through an ETL pipeline ensures analysts can work with a unified and consistent dataset.
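This is a minimal sketch of the integration step for the traffic example. It assumes collision reports and weather observations have already been extracted and share a `date` and `city` key, and all field names and values are hypothetical. The sketch performs a left join, so no collision record is lost when weather data is missing.

```python
# Hypothetical, already-extracted records from two of the sources above.
collisions = [
    {"date": "2024-01-05", "city": "Springfield", "collisions": 3},
    {"date": "2024-01-06", "city": "Springfield", "collisions": 5},
    {"date": "2024-01-06", "city": "Shelbyville", "collisions": 1},
]
weather = [
    {"date": "2024-01-05", "city": "Springfield", "precip_mm": 0.0, "visibility_km": 10.0},
    {"date": "2024-01-06", "city": "Springfield", "precip_mm": 12.4, "visibility_km": 2.5},
]


def integrate(collisions: list[dict], weather: list[dict]) -> list[dict]:
    """Left-join weather onto collision records by (date, city).

    Collision rows without a matching weather observation are kept,
    with the weather fields set to None so gaps stay visible.
    """
    by_key = {(w["date"], w["city"]): w for w in weather}
    unified = []
    for row in collisions:
        w = by_key.get((row["date"], row["city"]), {})
        unified.append({
            **row,
            "precip_mm": w.get("precip_mm"),
            "visibility_km": w.get("visibility_km"),
        })
    return unified


dataset = integrate(collisions, weather)
```

Before joining, a real project would also need to reconcile differences between sources. These include time zones, location granularity (city versus road segment or GPS coordinates), and reporting intervals.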