🧹 Cleaning Data

Turning Raw Data into Reliable Insights

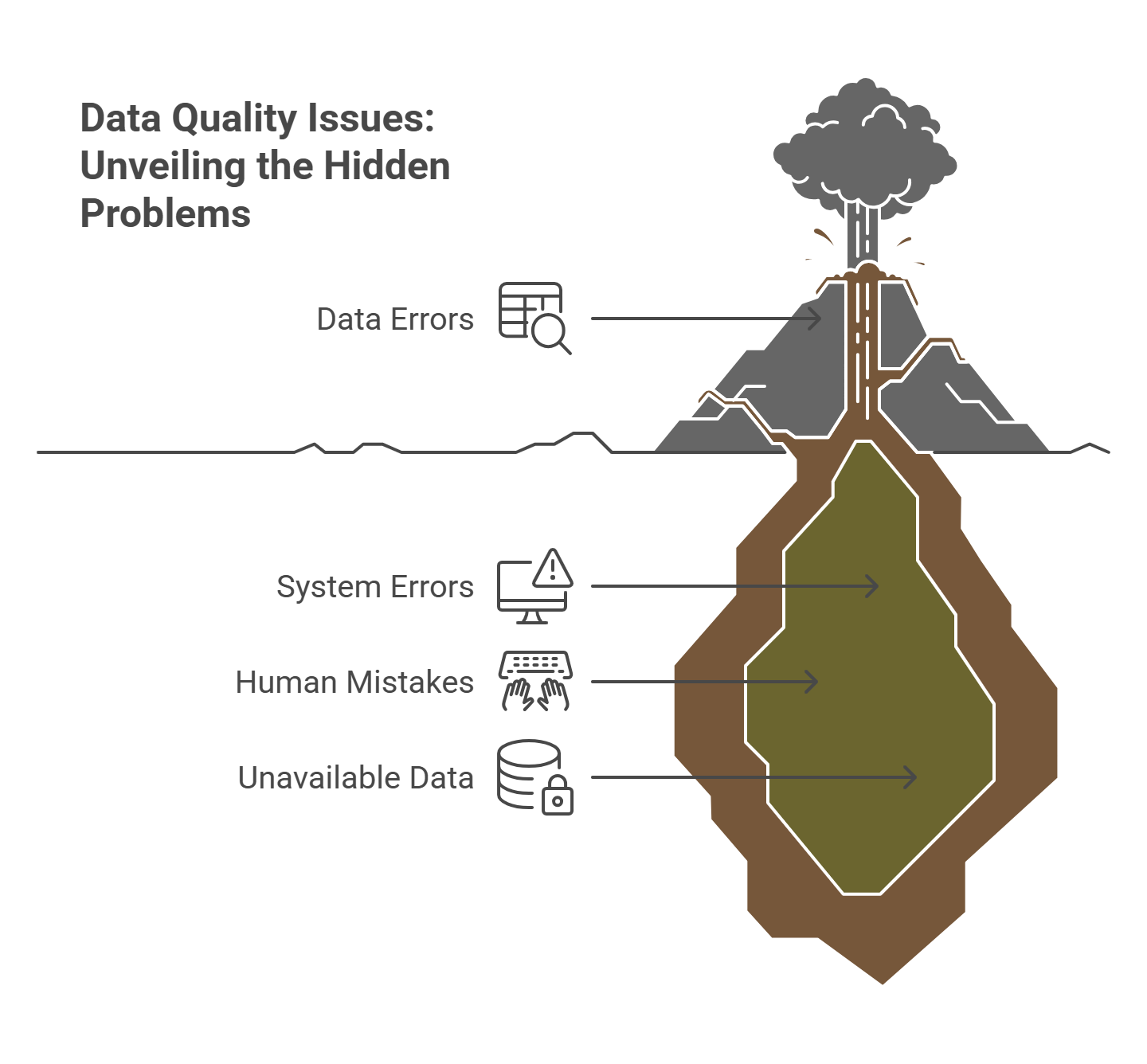

Cleaning data is a critical step in the analytics process. Raw data often contains errors, missing values, duplicates, or inconsistencies that can lead to misleading results if not addressed. The goal of data cleaning is to prepare a dataset that is accurate, consistent, and ready for meaningful analysis.

⚠️ Common Data Issues

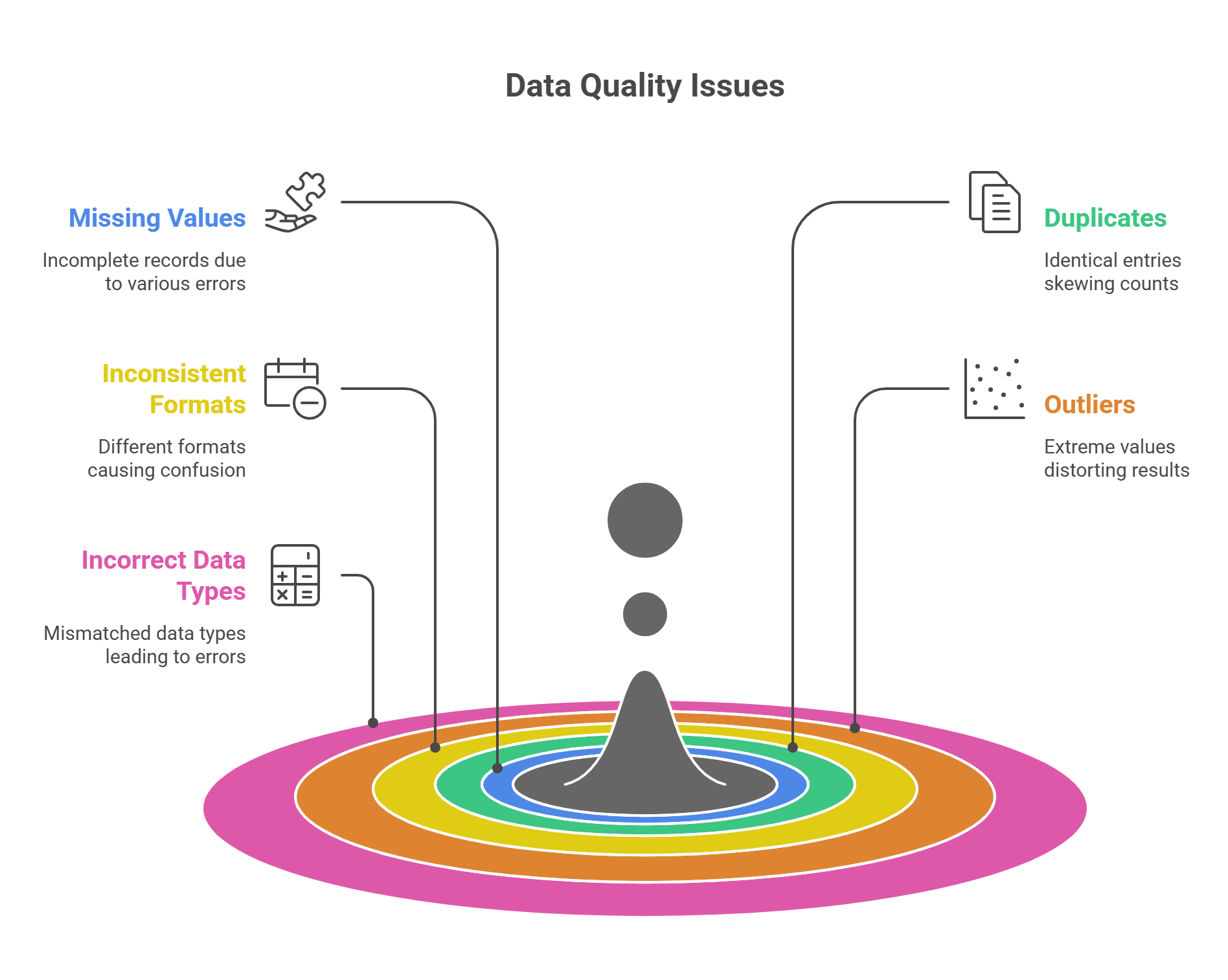

- Missing Values: Incomplete records caused by system errors, data-entry mistakes, or data that was never collected.

- Duplicates: Multiple identical entries that can skew counts and averages.

- Inconsistent Formats: Different date formats, units of measure, or capitalization.

- Outliers: Extremely high or low values that may distort results if not evaluated carefully.

- Incorrect Data Types: Numeric fields stored as text or categorical values coded inconsistently.
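Before fixing anything, it helps to measure how widespread each issue is. The sketch below profiles a small dataset held as a list of dictionaries. It counts missing values per field, exact duplicate rows, and entries in a numeric field that do not parse as numbers. It uses only the Python standard library. The field names and sample records are hypothetical, and treating blank strings as "missing" is an assumption you may need to adjust.

```python
def is_missing(value):
    """Treat None and blank/whitespace-only strings as missing (an assumption)."""
    return value is None or str(value).strip() == ""


def is_number(value):
    """Return True if the value can be parsed as a float."""
    try:
        float(value)
        return True
    except (TypeError, ValueError):
        return False


def profile(rows, numeric_fields=()):
    """Count missing values, exact duplicate rows, and non-numeric entries."""
    fields = sorted({key for row in rows for key in row})
    missing = {f: sum(is_missing(row.get(f)) for row in rows) for f in fields}

    # Exact duplicates: rows whose (field, value) pairs match an earlier row.
    seen, duplicates = set(), 0
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    # Type problems: non-missing values in a numeric field that fail to parse.
    bad_types = {
        f: sum(1 for row in rows if not is_missing(row.get(f)) and not is_number(row.get(f)))
        for f in numeric_fields
    }
    return {"missing": missing, "duplicates": duplicates, "bad_types": bad_types}


# Hypothetical sample records.
records = [
    {"id": "1", "name": "Ana", "age": "34"},
    {"id": "2", "name": "", "age": "thirty"},
    {"id": "1", "name": "Ana", "age": "34"},
    {"id": "3", "name": "Raj", "age": None},
]
print(profile(records, numeric_fields=["age"]))
```

Inconsistent formats and outliers usually need field-specific rules, so they are left out of this generic profile.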

🔧 Techniques for Data Cleaning

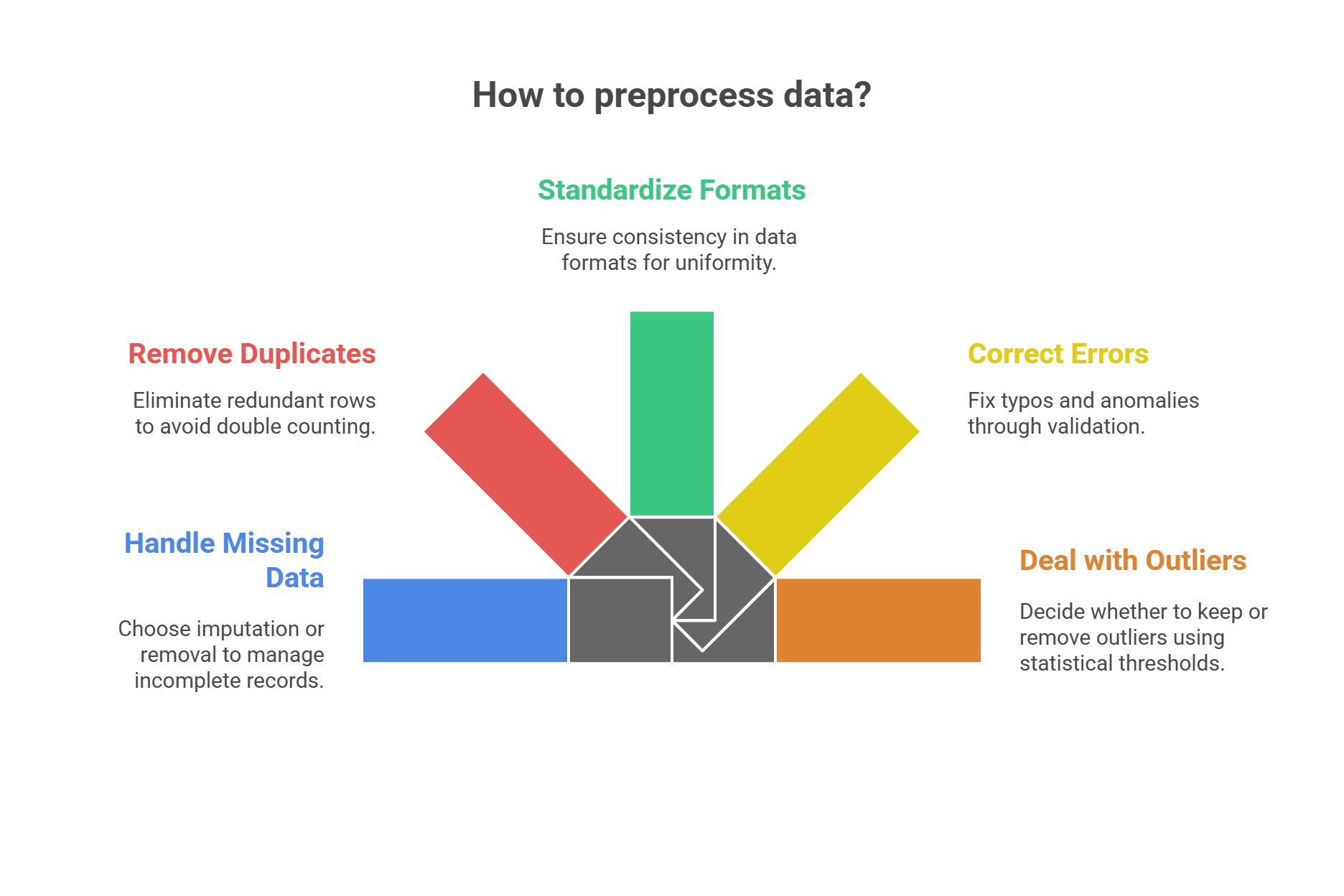

- Handling Missing Data: Options include imputation (filling gaps with the mean, median, or mode), predictive modeling, or removing incomplete records.

- Removing Duplicates: Identify and delete redundant rows to avoid double counting.

- Standardizing Formats: Convert values into consistent formats (e.g., YYYY-MM-DD for dates, metric units for measurements).

- Correcting Errors: Fix typos, invalid entries, and other anomalies using validation rules and cross-checks against trusted sources.

- Dealing with Outliers: Use statistical thresholds (e.g., z-scores, IQR) to detect outliers, then decide whether each one should be kept or removed.
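Several of these techniques can be sketched in plain Python. The functions below are illustrative assumptions, not a reference implementation. They cover median imputation (the median is less sensitive to outliers than the mean), key-based de-duplication, date standardization to YYYY-MM-DD, and IQR-based outlier detection using the conventional 1.5 × IQR fences. The list of accepted date formats is hypothetical. In particular, `%d/%m/%Y` assumes day-first dates, so a value such as 03/04/2024 is read as 3 April. In pandas, `fillna`, `drop_duplicates`, and `to_datetime` cover similar ground.

```python
import statistics
from datetime import datetime


def impute_median(values):
    """Median imputation: replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)  # raises StatisticsError if nothing is observed
    return [fill if v is None else v for v in values]


def drop_duplicates(rows, key):
    """Keep the first row for each value of `key`; drop later repeats."""
    seen, kept = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            kept.append(row)
    return kept


# Accepted input formats are an assumption; "%d/%m/%Y" treats 03/04/2024 as 3 April.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def standardize_date(text):
    """Return the date as YYYY-MM-DD, or None if no accepted format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return None  # leave unparseable values for manual review


def iqr_outliers(values, k=1.5):
    """Return values outside the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


print(impute_median([34, None, 29, 41, None]))     # [34, 34, 29, 41, 34]
print(standardize_date("Mar 14, 2024"))            # 2024-03-14
print(iqr_outliers([10, 12, 11, 13, 12, 95, 11]))  # [95]
```

`iqr_outliers` only reports candidates. Whether to keep or remove each one is still a judgment call, as the list above notes.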

⚙️ Tools Commonly Used

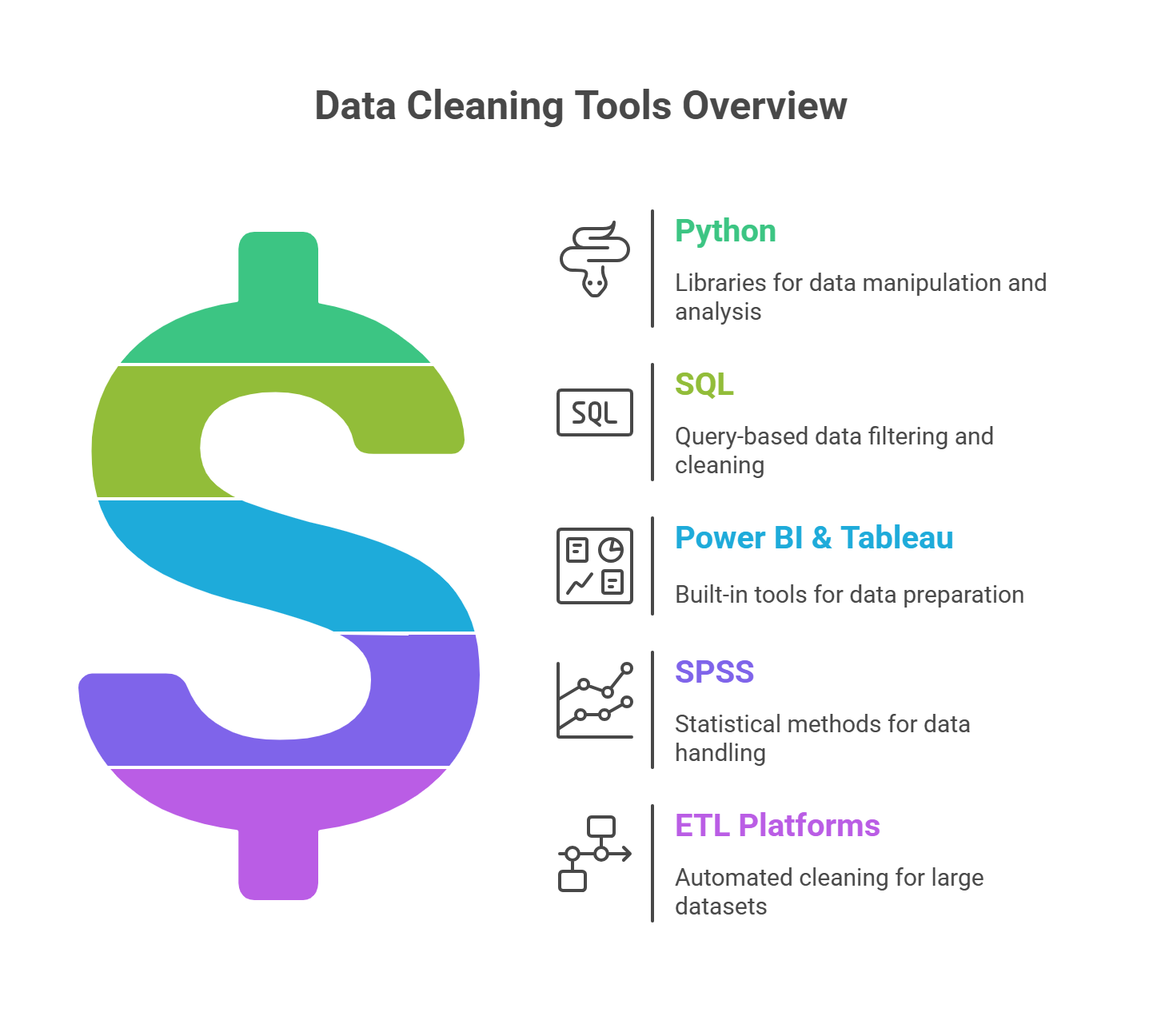

- Python: Pandas, NumPy, Scikit-learn for imputation, cleaning, and validation.

- SQL: Data profiling, filtering, and cleaning using queries.

- Power BI & Tableau: Built-in data preparation tools, such as Power Query in Power BI and Tableau Prep for Tableau workflows.

- SPSS: Handling missing values, recoding variables, and outlier analysis.

- ETL Platforms: Talend, Informatica, or Apache NiFi for large-scale automated cleaning.
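You can try SQL profiling and cleaning queries without a database server by using Python's built-in `sqlite3` module. The table, columns, and rows below are hypothetical. Upper-casing country values is just one possible convention. The de-duplication relies on SQLite's implicit `rowid`, so other databases would more typically use a `ROW_NUMBER()` window function.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER, name TEXT, country TEXT);
    INSERT INTO customers VALUES
        (1, 'Ana Silva', ' usa '),
        (2, 'Raj Patel', NULL),
        (1, 'Ana Silva', 'USA'),
        (3, 'Li Wei', 'Canada');
""")

# Profiling: total rows and rows missing a country (IS NULL yields 1 or 0 in SQLite).
total, missing_country = conn.execute(
    "SELECT COUNT(*), SUM(country IS NULL) FROM customers"
).fetchone()

# Standardizing: trim whitespace and use one capitalization convention.
conn.execute("UPDATE customers SET country = UPPER(TRIM(country)) WHERE country IS NOT NULL")

# De-duplicating: keep the first-inserted row (lowest rowid) for each customer_id.
conn.execute("""
    DELETE FROM customers
    WHERE rowid NOT IN (SELECT MIN(rowid) FROM customers GROUP BY customer_id)
""")

rows = conn.execute(
    "SELECT customer_id, name, country FROM customers ORDER BY customer_id"
).fetchall()
print(total, missing_country)
print(rows)
```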

💡 Best Practices

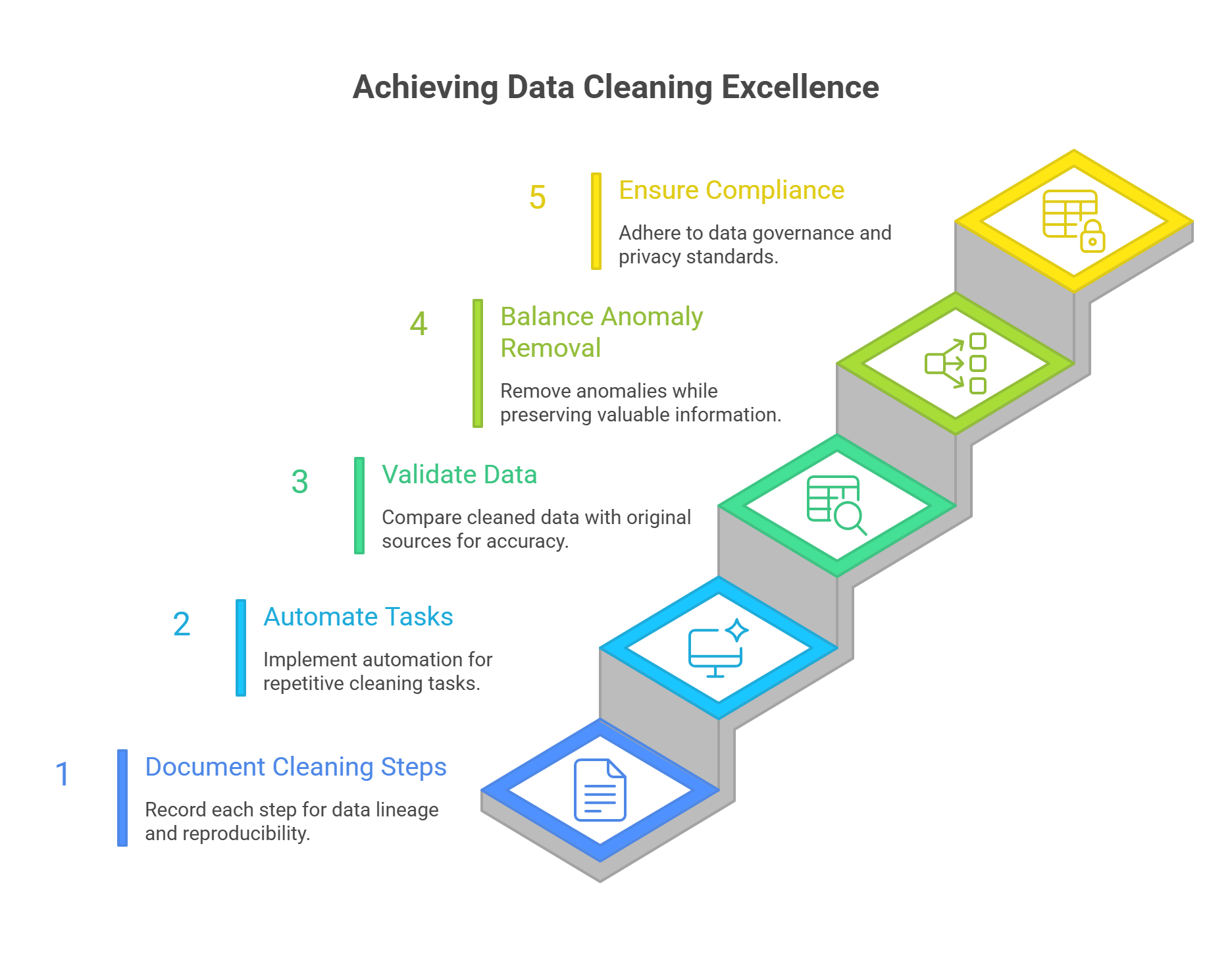

- Document each cleaning step for data lineage and reproducibility.

- Automate repetitive cleaning tasks where possible.

- Always validate cleaned data against original sources.

- Strike a balance between removing anomalies and preserving valuable information.

- Ensure compliance with data governance and privacy standards.
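One lightweight way to combine documentation, automation, and validation is to define cleaning as an ordered list of named steps and log what each step did. This is a minimal sketch under assumed names: `run_pipeline`, `strip_whitespace`, and `drop_missing_email` are hypothetical. The final check only confirms that cleaned emails trace back to the raw input, a stand-in for fuller validation against original sources. The type hints require Python 3.9 or later.

```python
from typing import Callable

# A step is a (description, function) pair; the step functions below are hypothetical.
Step = tuple[str, Callable[[list[dict]], list[dict]]]


def run_pipeline(rows: list[dict], steps: list[Step]) -> tuple[list[dict], list[str]]:
    """Apply each cleaning step in order and log its effect on the row count."""
    log = []
    for description, func in steps:
        before = len(rows)
        rows = func(rows)
        log.append(f"{description}: {before} -> {len(rows)} rows")
    return rows, log


def strip_whitespace(rows):
    """Trim leading/trailing spaces from every string value."""
    return [{k: v.strip() if isinstance(v, str) else v for k, v in row.items()} for row in rows]


def drop_missing_email(rows):
    """Remove rows whose email is absent or empty."""
    return [row for row in rows if row.get("email")]


raw = [
    {"email": " a@example.com ", "city": "Lisbon "},
    {"email": "", "city": "Porto"},
    {"email": "b@example.com", "city": " Braga"},
]
steps = [("Strip whitespace", strip_whitespace), ("Drop rows missing email", drop_missing_email)]
cleaned, log = run_pipeline(raw, steps)

# Simple validation: every cleaned email must trace back to a raw record.
source_emails = {row["email"].strip() for row in raw}
assert all(row["email"] in source_emails for row in cleaned)

for entry in log:
    print(entry)
```

The step list doubles as documentation: reading it in order tells you exactly what was done to the data, and the log records how many rows each step affected.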

📊 Example: Cleaning Customer Data

Consider a dataset from an e-commerce platform. Cleaning may involve:

- Filling missing postal codes using external reference data.

- Removing duplicate customer records with identical IDs.

- Standardizing phone numbers to international format.

- Mapping inconsistent country names (e.g., “USA”, “United States”, “U.S.”) to a single standard value.

- Flagging unusually high purchase amounts for review.
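The customer-data steps above could be combined into a single pass along these lines. Everything here is a hypothetical sketch. The field names, the country alias table, the postal-code lookup (standing in for external reference data), and the review threshold of 1,000 are all assumptions. The phone rule is another: it assumes that numbers without a leading "+" are 10-digit national numbers with country code 1. Production code would more likely rely on a dedicated phone library, such as the `phonenumbers` package for Python.

```python
import re

# Hypothetical reference data; a real pipeline would load these from trusted sources.
COUNTRY_ALIASES = {"usa": "United States", "united states": "United States", "u.s.": "United States"}
POSTAL_LOOKUP = {"Springfield": "12345"}


def normalize_phone(raw, default_cc="1", national_length=10):
    """Return '+<digits>' (E.164 style), or None when the number is ambiguous.

    Assumes numbers without a leading '+' are national numbers of the default
    country; real numbering rules vary by country.
    """
    digits = re.sub(r"\D", "", raw)
    if raw.strip().startswith("+"):
        candidate = digits
    elif len(digits) == national_length:
        candidate = default_cc + digits
    else:
        return None
    return "+" + candidate if 0 < len(candidate) <= 15 else None  # E.164: at most 15 digits


def clean_customers(rows, review_threshold=1000.0):
    """De-duplicate by ID, fill postal codes, standardize phones and countries, flag big purchases."""
    seen_ids, cleaned = set(), []
    for row in rows:
        if row["customer_id"] in seen_ids:
            continue  # duplicate ID: keep the first record only
        seen_ids.add(row["customer_id"])
        country = row["country"].strip()
        cleaned.append({
            "customer_id": row["customer_id"],
            "postal_code": row["postal_code"] or POSTAL_LOOKUP.get(row["city"]),
            "phone": normalize_phone(row["phone"]),
            "country": COUNTRY_ALIASES.get(country.lower(), country),
            "needs_review": row["purchase_total"] > review_threshold,
        })
    return cleaned


customers = [
    {"customer_id": 101, "city": "Springfield", "postal_code": "", "phone": "(555) 010-4477",
     "country": "USA", "purchase_total": 120.0},
    {"customer_id": 101, "city": "Springfield", "postal_code": "", "phone": "(555) 010-4477",
     "country": "USA", "purchase_total": 120.0},
    {"customer_id": 102, "city": "Shelbyville", "postal_code": "54321", "phone": "+1 555 010 9900",
     "country": "U.S.", "purchase_total": 25000.0},
]
for row in clean_customers(customers):
    print(row)
```

Phone numbers that cannot be normalized come back as `None` rather than being guessed, so they can be routed to manual review alongside the flagged purchases.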